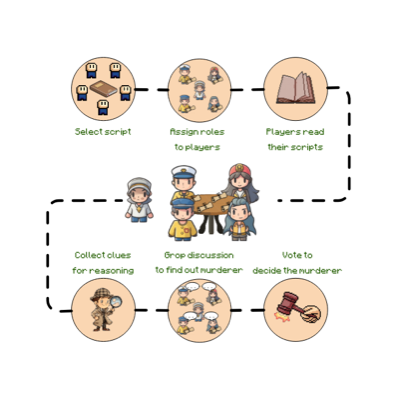

LLMs and Agents

In this research direction, we aim to develop algorithms and systems for Large Language Models and AI Agents.

NLP for Materials Science

In this research project, we aim to develop effective NLP techniques for materials discovery.

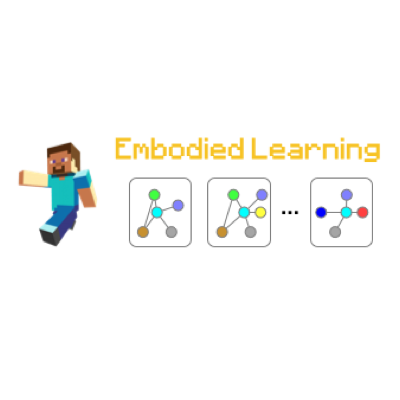

Embodied learning

In this research direction, we aim to develop LLM-based systems to solve multimodal and embodied learning tasks.

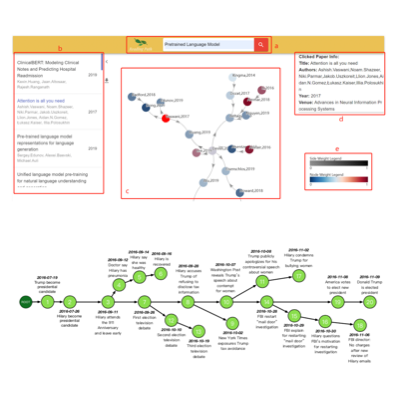

Graph-based NLP

This project aims to develop efficient graph representation, learning, and reasoning techniques for NLP tasks.

Question Generation

This project aims to develop efficient algorithms and techniques for question generation and question answering.

Causal and Analogical AI

In this research direction, we explore the key roles that causality and analogy play in NLP and machine intelligence.

Concepts and Knowledge

This project focuses on constructing and expanding taxonomies, ontologies, and knowledge graphs.

Document Intelligence

The general goal of this project is to develop document-grounded NLP systems to cope with real-world usage scenarios.

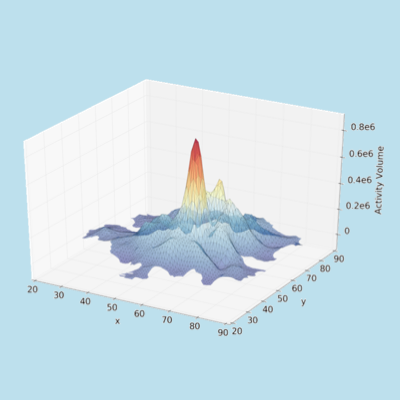

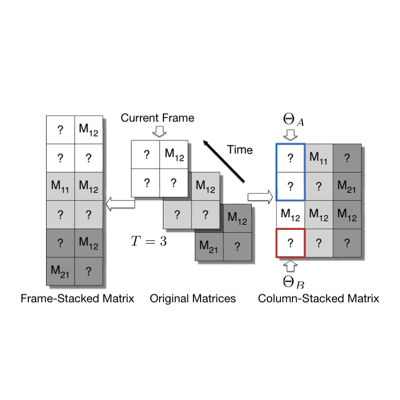

Spatial Data Analysis

This project is about spatial data analysis and recovery: we recover spatial data from partial, aggregated observations.

Internet Latency Prediction

This project collected network latency data from the Seattle platform and developed new algorithms for network latency prediction.